This post is about how to build Bayesian models of Gaussian processes and hidden Markov models in R using Stan, how to fit them to data, and how to compare those fits to see which model is more likely to explain the data. But first we’ll talk background - who cares which of those models best explains some data?

- Background

- Setup

- Gaussian Processes

- Hidden Markov Models

- Model Comparison using Bridge Sampling

- Conclusion

Background

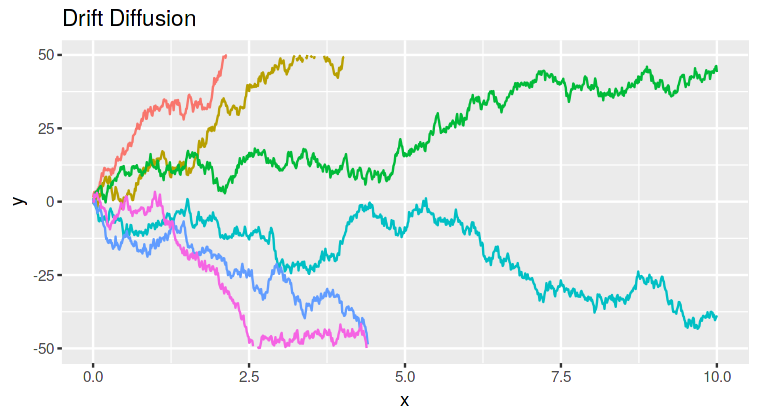

Lots of work in perceptual decision making has led to the hypothesis that perceptual decisions (such as deciding which way an object is moving, or whether an animal is predator or prey) are made by brain networks accumulating evidence for each option until some decision threshold is passed. These evidence acumulation theories assume that brain activity starts at some baseline, and then integrates the incoming evidence for each choice. When a decision threshold is passed, the option corresponding to that threshold is choosen. Lots of work has shown that models built in this way (called drift-diffusion models) explain perceptual decisions very well.

For example, the image below shows simulations of such an integration process. Each line is a different trial or decision.

However, there are other types of decisions besides purely perceptual ones. Value-based decisions are higher-level decisions between actions which may result in outcomes that have different values to the chooser (like choosing what product to buy, which stock to invest in, or where to go for lunch). These decisions are different from perceptual decisions because the evidence for one option over the other usually isn’t coming directly from the senses. With value-based decisions the chooser is using internal information as evidence: memories, background knowledge, and previously-learned values.

Some people have suggested that despite the difference in where the evidence is coming from, value-based decisions might also be made in the brain using this accumulation-to-threshold algorithm. However, others have hypothesized that instead the brain may simply sample the options serially, and choose one after enough sampling. This makes sense intuitively - if you’re deciding between two products to buy, it seems like you consider one option, then the other, and then maybe consider the first option again, etc, until you’ve decided which option to take. The accumulation-to-threshold algorithm implies that both options are considered simultaneously!

To determine if a brain area is sampling from options or more slowly accumulating evidence, we can record neural activity from that area, and decode the probability that the brain area is representing one option over the other as a subject is making a decision between those two options. If that probability starts somewhere in the middle and slowly moves to favor one option, then it is more likely that the brain area is acting as an evidence accumulator and not sampling. On the other hand, if the decoded probability flickers back and forth between options, it is more likely that the brain area is sampling.

Of course, we can’t just take a look at the decoded probability traces and decide which one it “looks like!” To quantify how sampling-like or accumulation-like the neural dynamics are, we will fit two different models to the neural activity and see which one best matches the neural activity. As an evidence-accumulation-like model, we’ll use a Gaussian process, which assumes the dependent variable is changing slowly over time. For a sampling-like model, we’ll use a hidden Markov model, which assumes the dependent variable depends on the current “hidden state,” which can change instantaneously between timesteps.

Setup

Let’s set up our computing environment:

# Packages

library(rstan)

library(ggplot2)

library(bayesplot)

library(invgamma)

library(bridgesampling)

rstan_options(auto_write = TRUE)

options(mc.cores = parallel::detectCores())

seed = 723456

# Colors

c_light <- c("#DCBCBC")

c_mid <- c("#B97C7C")

c_dark <- c("#8F2727")

c_blue_light <- c("#b2c4df")

c_blue_mid <- c("#6689bf")

c_blue_dark <- c("#3d5272")

color_scheme_set("red")

Gaussian Processes

The first model we’ll be fitting is a Gaussian process. These models rely on the assumption that the dependent variable (\( y \)) at a given independent variable value (in our case, time) will be more similar to the \( y \) value at timepoints nearby. They also assume that the distribution of \( y \) values is a Gaussian distribution. We can use a Gaussian process to model how the probability of an option, as decoded from neural activity, changes over time.

Vanilla Gaussian Process

The most confusing part about Gaussian processes (in my opinion) is that instead of thinking of your data as \( N \) datapoints which have \( y \) values in 1-dimensional space, you instead need to think of having a single datapoint which has a \( y \) value in \( N \)-dimensional space. To visualize this, let’s suppose we have just 2 datapoints - the first with a \( y \) value of \( 1 \), and the second with a \( y \) value of \( 1.5 \):

point_one = data.frame(x=c(1), y=c(1))

point_two = data.frame(x=c(2), y=c(1.5))

ggplot() +

geom_point(data=point_one, aes(x=x, y=y), color=c_blue_mid, size=5) +

geom_point(data=point_two, aes(x=x, y=y), color=c_mid, size=5) +

xlim(0, 3) + ylim(0, 2) +

ggtitle('Two Datapoints with 1D y-values')

We can think of the \( y \) values of these two datapoints as being a single point in 2-dimensional space, where the value in the first dimension is \( 1 \), and the value in the second dimension is \( 1.5 \):

one_point = data.frame(x=c(1), y=c(1.5))

ggplot(one_point) +

geom_vline(xintercept=1, color=c_blue_light, size=1) +

geom_hline(yintercept=1.5, color=c_light, size=1) +

geom_point(aes(x=x, y=y), color='black', size=5) +

xlim(0, 2) + ylim(0, 2) +

xlab('y value of first datapoint') +

ylab('y value of second datapoint') +

ggtitle('One Datapoint with 2D y-value')

Gaussian processes constrain points with similar \( x \) values to have similar \( y \) values by modeling them as being a single \( N \)-dimensional datapoint drawn from a Gaussian (normal) distribution with a given covarance structure. This “covariance structure” is defined by a covariance matrix \( \Sigma \), which depends on the \( x \) values. So, we can think of our \( y \) values as being drawn from a multivariate normal distribution with covariance matrix \( \Sigma \) (and also some mean \( \mu \), but here we’ll always use 0 for the mean).

\[\mathbf{y} \sim \mathcal{N}(\mu=0, \Sigma)\]And remember, the \( \mathbf{y} \) in the above equation is a single point in \( N \)-dimensional space representing the \( y \) values of all our datapoints, not point(s) in 1-dimensional space.

If the distance between the \( x \) values of two points is low, then the Gaussian distribution has a high covariance between those two dimensions, and the two points’ \( y \) values are also likely to be similar:

sigma = matrix(c(1,0.8,0.8,1), nrow=2)

data.grid = expand.grid(x=seq(-3, 3, length.out=200), y=seq(-3, 3, length.out=200))

q.samp = cbind(data.grid, prob=mvtnorm::dmvnorm(data.grid, sigma=sigma))

ggplot() +

geom_contour(data=q.samp, aes(x=x, y=y, z=prob), size=1, binwidth=0.08, color='black') +

geom_vline(xintercept=1, color=c_blue_light, size=1) +

geom_hline(yintercept=1.5, color=c_light, size=1) +

geom_point(data=one_point, aes(x=x, y=y), color='black', size=5) +

xlab('y value of first datapoint') +

ylab('y value of second datapoint') +

ggtitle('Joint probability distribution with high covariance')

If we take a vertical slice through this distribution (vertical line above), we can see that, given the value of the first datapoint, the probability of the value of the 2nd datapoint is pulled toward the value of the first datapoint.

x = cbind(rep(1, 300), seq(-2.5, 2.5, l=300))

p = mvtnorm::dmvnorm(x, sigma=sigma)

ggplot(data.frame(x=x, p=p)) +

geom_line(aes(x=x[,2], y=p), size=1, color=c_mid) +

geom_vline(xintercept=1.5, color=c_light, size=1) +

geom_vline(xintercept=1, color=c_blue_light, size=1) +

annotate("text", label="1st datapoint", x=0.9, y=0.02, angle=90) +

annotate("text", label="2nd datapoint", x=1.4, y=0.02, angle=90) +

xlab('y value of second datapoint') +

ylab('Probability') +

ggtitle('Probability of 2nd datapoint given 1st')

And if we take a horizontal slice through this distribution (horizontal line in the joint distribution plot above), we can see that, given the value of the second datapoint, the probability of the value of the first datapoint is pulled toward the value of the second datapoint.

x = cbind(seq(-2.5, 2.5, l=300), rep(1.5, 300))

p = mvtnorm::dmvnorm(x, sigma=sigma)

ggplot(data.frame(x=x, p=p)) +

geom_line(aes(x=x[,1], y=p), size=1, color=c_blue_mid) +

geom_vline(xintercept=1.5, color=c_light, size=1) +

geom_vline(xintercept=1, color=c_blue_light, size=1) +

annotate("text", label="1st datapoint", x=0.9, y=0.02, angle=90) +

annotate("text", label="2nd datapoint", x=1.4, y=0.02, angle=90) +

xlab('y value of first datapoint') +

ylab('Probability') +

ggtitle('Probability of 1st datapoint given 2nd')

In addition to being pulled towards the value of the other datapoint, notice that the probability distributions are also pulled towards \( 0 \). This is because the gaussian process assumes that the mean of all the data is \( 0 \) (or at least ours does, because we’re using a mean function of \( 0 \)).

On the other hand, if the \( x \) values of two datapoints are distant, then a Gaussian process forces there to be a low covariance between the two dimensions (we’ll see how below), and the value of one datapoint won’t depend very much on the value of the other. That is, the marginal probability of one datapoint given the value of the other will just be a normal distribution centered at 0, and won’t be affected by the value of the other datapoint.

m = c(0, 0)

sigma = matrix(c(1,0,0,1), nrow=2)

data.grid = expand.grid(x=seq(-3, 3, length.out=200), y=seq(-3, 3, length.out=200))

q.samp = cbind(data.grid, prob=mvtnorm::dmvnorm(data.grid, mean=m, sigma=sigma))

ggplot() +

geom_contour(data=q.samp, aes(x=x, y=y, z=prob), size=1, binwidth=0.04, color='black') +

geom_point(data=one_point, aes(x=x, y=y), color='black', size=5) +

xlab('y value of first datapoint') +

ylab('y value of second datapoint') +

ggtitle('Joint probability distribution with low covariance')

The covariance structure of the Gaussian distribution we’ve been talking about is defined by a covariance matrix \( \Sigma \). The covariance matrix is just a square matrix, where the value at row \( i \) and column \( j \) is computed using a covariance function given the \( x \) values of the \( i \)-th and \( j \)-th datapoints.

\[\Sigma_{i,j} = K(x_i,x_j)\]This covariance function (or “kernel”) is just a function that takes the \( x \)

values of two points, and returns a single value. With basic covariance

functions, this value is large if the two \( x \) values are close together, and

small when they’re far apart (although there are other types of covariance

functions which do things differently, such as periodic kernels).

We’ll use the “squared exponential” kernel (aka the

Gaussian kernel or the radial basis function kernel). For two data points which

have \( x \) values \( x_i \) and \( x_j \), the squared exponential kernel value between

them is:

This is just a Gaussian function where the distance between the two \( x \) values is the dependent variable.

cov_exp_quad <- function(x) exp(-x^2/2)

plot(cov_exp_quad, -4, 4, n=300, xlab=expression(x[i] - x[j]),

ylab=expression(K( x[i], x[j] )),

main="Squared Exponential Kernel",

yaxt='n', bty='n', lwd=3, col=c_blue_mid)

The \( \alpha \) and \( \rho \) parameters in the kernel function are two hyperparameters of the Gaussian process.

\( \alpha^2 \) is the signal variance (or “output” variance), because it controls how distant \( y \) values are likely to fall from 0 by scaling the value of all elements of the covariance matrix. A small \( \alpha \) value will yield a Gaussian process which hovers close to 0 (assuming you’re using 0 as your mean function, as we are). A larger \( \alpha \) value will yield a Gaussian process which can take values further from 0.

\( \rho \) is the length-scale parameter, because it controls how quickly the \( y \) values can change over time. Smaller values of \( \rho \) yield Gaussian processes whose \( y \) values change quickly over time, while larger values of \( \rho \) yield Gaussian processes with \( y \) values which change more slowly with time.

Finally, the \( \sigma^2 \) term of the kernel function is the noise standard deviation - because we’re assuming there is some noise associated with our observations. \( \delta_{ij} \) takes the value \( 1 \) when \( i \) and \( j \) are the same, and 0 otherwise - so we’re just adding \( \sigma^2 \) to the diagonal of \( \Sigma \).

Bounded Gaussian Process

So far we’ve assumed that the dependent variable can take any value! This isn’t the case when we’re looking at the probability of a brain area representing an option - the probability value must be between 0 and 1.

To account for this, we can pass our probability values (our \( y \) values) through the logit function, which transforms values between 0 and 1 to values between \( -\infty \) and \( \infty \). This “logit” function is the inverse of the sigmoidal (logistic) function.

\[\text{logit}(x) = \log \left( \frac{x}{1-x} \right)\]Then, we can perform our Gaussian process regression on the transformed data.

However, we can’t just transform the input values without transforming the

probability density appropriately! Because we’ve logit-transformed our values

to be between 0 and 1, our Gaussian process is now modeling points as being

drawn from a multivariate

logit-normal distribution,

instead of a normal multivariate normal distribution. (specifically a

logit-normal distribution in the case of sigmoidal elements, not simplex

elements).

A multivariate logistic normal distribution is defined between 0 and 1 in each of \( N \) dimensions,

\[\{ \mathbf{x} \in \mathbb{R}^N ~ | ~ \forall x_i, ~ 0 < x_i < 1 \}\]which is the space our data is in! That is, we have \( N \) datapoints (which we treat as a single datapoint in \( N \)-dimensional space), each of which falls between 0 and 1. The probability density function for the multivariate logistic normal distribution is

\[\mathcal{N}_{\text{logit}}(\mathbf{x};\mathbf{\mu},\mathbf{\Sigma}) = \frac{1}{\prod_{i=1}^D x_i (1-x_i)} \frac{1}{\sqrt{|2 \pi \Sigma |}} \exp \left( -\frac{1}{2} \left( \text{logit}(\mathbf{x})-\mathbf{\mu} \right)^\top \mathbf{\Sigma}^{-1} \left( \text{logit}(\mathbf{x})-\mathbf{\mu} \right) \right)\]Which is a lot of math - but it’s just equivalent to the value of a (regular) multivariate normal distribution given logit-transformed \( x \), times a scaling factor which ensures the density integrates to 1.

\[\mathcal{N}_{\text{logit}}(\mathbf{x};\mathbf{\mu},\mathbf{\Sigma}) = \frac{1}{\prod_{i=1}^D x_i (1-x_i)} \mathcal{N}(\text{logit}(\mathbf{x});\mathbf{\mu},\mathbf{\Sigma})\]Without this scaling factor, we would be logit-transforming the \( x \) values without appropriately scaling the probability density to compensate, and the probability density wouldn’t integrate to 1!

For more information on Gaussian processes in general, Carl Rasmussen and Christopher Williams have written a great textbook on Gaussian processes which is free.

Priors

This is a Bayesian analysis, and so we’ll be putting reasonable priors on our variables of interest. The priors represent our expectation of what values the parameters can reasonably take, prior to seeing the data. That is - how likely we think it is that a parameter can take a given value. For a Gaussian process, it’s important to have priors on our parameters, especially the length-scale parameter. Without it, the best-fit length scale parameter can explode to huge values or shrink to tiny values, because our data does not contain any information about shorter timescales than occur between our datapoints, or larger timescales than the duration of our data. Check out this great case study by Michael Betancourt for more about why that happens.

For the length scale parameter (\( \rho \)) we use an inverse gamma prior with \( \alpha=2 \) and \( \beta=0.5 \).

\[\frac{1}{\rho} \sim \text{Gamma}(\alpha=2, \beta=0.5)\]This results in a distribution which is defined \( >0 \), and has a low density near zero and at higher values. This prior beleif about the value of \( \rho \) will be incorporated into our Bayesian estimate of the value when we use Bayes rule to compute the posterior probability.

rho_prior <- function(x) dinvgamma(x, 2, 1/2)

plot(rho_prior, 0, 3, n=300, xlab='Rho', ylab='',

main="Length scale parameter prior",

yaxt='n', bty='n', lwd=3, col=c_blue_mid)

For the signal standard deviation parameter (\( \alpha \), aka “marginal” standard deviation or “ouput” standard deviation) we use a half-normal prior (a normal distribution but with zero density \( <0 \)) with \( \sigma=2 \).

\[\alpha \sim \mathcal{N}(\mu=0, \sigma=2), ~ \alpha \geq 0\]This results in a distribution which only has density \( \geq 0 \), and has a low density at higher values.

alpha_prior <- function(x) ifelse(x>=0, 2*dnorm(x, 0, 2), 0)

plot(alpha_prior, 0, 6, n=300, xlab='Alpha', ylab='',

main="Signal standard deviation parameter prior",

yaxt='n', bty='n', lwd=3, col=c_blue_mid)

For the noise standard deviation parameter (\( \sigma \)) we also use a half-normal prior, but with \( \sigma=1 \).

\[\sigma \sim \mathcal{N}(\mu=0, \sigma=1), ~ \alpha \geq 0\]This also results in a distribution which only has density \( \geq 0 \), and has a low density at higher values.

sigma_prior <- function(x) ifelse(x>=0, 2*dnorm(x, 0, 1), 0)

plot(sigma_prior, 0, 6, n=300, xlab='Sigma', ylab='',

main="Noise standard deviation parameter prior",

yaxt='n', bty='n', lwd=3, col=c_blue_mid)

Generating Data from a Gaussian Process

We’ll be using Stan to generate and fit Bayesian models of Gaussian processes and, later, hidden Markov models. Stan is a Bayesian modeling package which allows us to use the Stan language to describe a model, and then fit the model to data from R (Stan also has interfaces to Python, Matlab, etc).

A Stan program consists of several blocks. The first block describes what input data the model can expect, and the second block contains how to transform to that data before using it to fit or generate data. The “parameters” block contain a list of free parameters of the model, and the “model” block contains how to compute the posterior probability of the model given parameter values and the data. For now, we’re just generating data (and not fitting the model to data) so we’ll leave those blocks empty. Finally, the “generated quantities” block computes output data.

writeLines(readLines("simulate_lgp.stan"))

data {

int<lower=1> N; //number of datapoints

real x[N]; //x values

real<lower=0> rho; //length scale

real<lower=0> alpha; //marginal/output/signal standard deviation

real<lower=0> sigma; //noise standard deviation

}

transformed data {

matrix[N, N] K = cov_exp_quad(x, alpha, rho)

+ diag_matrix(rep_vector(1e-10, N));

matrix[N, N] L_K = cholesky_decompose(K);

}

parameters {}

model {}

generated quantities {

// Declare variables

vector[N] uf;

vector[N] uy;

vector[N] f;

vector[N] y;

// Function and samples in untransormed space

uf = multi_normal_cholesky_rng(rep_vector(0, N), L_K);

for (i in 1:N)

uy[i] = normal_rng(uf[i], sigma);

// Logit function and samples

f = inv_logit(uf);

y = inv_logit(uy);

}

We can generate data from a Gaussian process using this Stan model. Let’s generate 100 samples in the range 0 to 5.

# Data

N = 100

x = seq(0, 5, l=N)

We’ll also set the hyperparameters of the gaussian process.

# Parameters

rho = 0.5

alpha = 2

sigma = 0.3

Now we can run the Stan model, which will generate data from a Gaussian process with these parameters.

# Simulate

sim_params = list(N=N, x=x, rho=rho, alpha=alpha, sigma=sigma)

sim_gp = stan(file='simulate_lgp.stan', data=sim_params, iter=1,

chains=1, seed=seed, algorithm="Fixed_param")

SAMPLING FOR MODEL 'simulate_lgp' NOW (CHAIN 1).

Iteration: 1 / 1 [100%] (Sampling)

Elapsed Time: 0 seconds (Warm-up)

0 seconds (Sampling)

0 seconds (Total)

# Extract simulated output

f = extract(sim_gp)$f[1,]

y = extract(sim_gp)$y[1,]

sim_data = data.frame(x=x, y=y, f=f)

Let’s plot our generated Gaussian process:

# Plot generated data

ggplot(sim_data) +

geom_line(aes(x=x, y=f), size=1) +

geom_point(aes(x=x, y=y), color=c_mid) +

ggtitle('Simulated Gaussian Process')

The line above is the latent function which was generated, and the dots are the generated observations. Notice how the latent function varies smoothly over time, and varies between 0 and 1. The observations have more jitter than the latent function (because they are generated from that function but with added noise), but each point is in the neigborhood of the previous point, and they are also bounded between 0 and 1.

Fitting a Gaussian Process

Now that we’ve generated some data from a Gaussian process, we can attempt to fit a Gaussian process model to that data. We’ll use a separate Stan model, one which fits the parameters of the model to the data, instead of generating data from the parameters. That means this time our data block will only contain the data (and not the desired parameter values, because we’re fitting those), the parameter block will contain the free parameters of the model, and the model block will compute the posterior probability of the model given parameter values and the data. In Stan, “target” is a variable which stores the log posterior probability, so we’ll keep adding to it values which contribute to the posterior.

writeLines(readLines("lgp.stan"))

data {

int<lower=1> N; //number of datapoints

real x[N]; //x values

vector<lower=0, upper=1>[N] y; //y values (one N-dim datapoint)

}

transformed data {

vector[N] mu = rep_vector(0, N); //mean function

real ln_scale = -sum(log(y)+log(1-y)); //scale for logit normal dists

}

parameters {

real<lower=0> rho; //length scale

real<lower=0> alpha; //marginal/output/signal standard deviation

real<lower=0> sigma; //noise standard deviation

}

model {

// Decomposed covariance matrix

matrix[N, N] K = cov_exp_quad(x, alpha, rho)

+ diag_matrix(rep_vector(square(sigma), N));

matrix[N, N] L_K = cholesky_decompose(K);

// Priors

target += inv_gamma_lpdf(rho | 2, 0.5);

target += normal_lpdf(alpha | 0, 2) + log(2); //half-normal dists

target += normal_lpdf(sigma | 0, 1) + log(2); //mult density by 2

// Likelihood

target += multi_normal_cholesky_lpdf(logit(y) | mu, L_K);

// Add scale such that likelihood integrates to 1 over y

target += ln_scale;

}

(Side note: it’s not necessary to perform the Cholesky decomposition in

order to fit the model here - you can just draw y from a multivariate

normal distribution with covariance matrix K. The fit will actually

run faster that way. However I’ve done the Cholesky decomposition

because it can be used to find the predictive mean and variance, as is

done in kridging. We’re not

actually doing that here, because we’re primarily interested in model

comparison, but for most applications you’d want the predictive mean and

variance. Also, for only 100 datapoints this method doesn’t take all

that much longer anyway, even with the decomposition.)

Let’s fit the model to our generated data.

# Fit

stan_rdump(c("N", "x", "y"), file="simulated_lgp.data.R")

gp_data = read_rdump("simulated_lgp.data.R")

fit_gp = stan(file='lgp.stan', data=gp_data, seed=seed)

After MCMC sampling has finished, we can view the posterior intervals for each parameter.

# Show results of fit

print(fit_gp)

Inference for Stan model: lgp.

4 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=4000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

rho 0.48 0.00 0.05 0.38 0.45 0.48 0.52 0.58 2531 1

alpha 1.89 0.01 0.48 1.19 1.55 1.81 2.14 3.02 2465 1

sigma 0.31 0.00 0.02 0.27 0.29 0.31 0.33 0.36 2454 1

lp__ 145.06 0.03 1.24 141.81 144.50 145.39 145.95 146.46 1653 1

Before checking that our fits recover the true parameters which were used to generate the data, we’ll run a few diagnostics to ensure that the MCMC sampling went smoothly and that our model isn’t misspecified.

First, we’ll check that the MCMC chains converged. The Rhat value in

the table above should be close to 1. The posterior distributions should

also look the same across chains (“chains” are independent runs of the fit,

so each run should have come up with the same answer if our model is

appropriate for our data and we ran enough MCMC iterations).

# Plot density per chain

posterior = as.array(fit_gp)

mcmc_dens_overlay(posterior, pars=c("rho", "alpha", "sigma"))

The distributions look similar between chains, which is good! This should also be apparent in the trace plot (the plot of posterior sample values vs sample number), and there shouldn’t be any obvious “sliding” over time.

# Plot trace per chain

mcmc_trace(posterior, pars=c("rho", "alpha", "sigma"))

Again, everything looks good here.

We should also check that none of the iterations hit the max tree depth.

# Check no chains hit max tree depth

check_treedepth(fit_gp)

0 of 4000 iterations saturated the maximum tree depth of 10.

Another diagnostic to check is the energy Bayesian fraction of missing information (E-BFMI).

# Check the energy Bayesian Fraction of Missing Information

check_energy(fit_gp)

E-BFMI indicated no pathological behavior.

Finally, we should check that iterations did not end with a divergence, indicating areas of parameter space that the sampling was not able to explore well.

# Check for divergences

check_divergences(fit_gp)

0 of 4000 iterations ended with a divergence.

All our diagnostics seem to indicate that we were able to fit the data without any sampling issues! Now we can see how the posterior distributions for the parameters match up to the true parameters we used to generate the data. First let’s define a function which will plot our posterior distributions from the MCMC samples and their 95% confidence intervals.

# Function to plot posterior distributions w/ 95% confidence intervals

interval_density = function(x, bot=0.025, top=0.975,

main="", xlab="", ylab="",

xlim=c(min(x),max(x)), lwd=1,

col1=c("#DCBCBC"), col2=c("#B97C7C")) {

dens = density(x[x>xlim[1] & x<xlim[2]])

plot(dens, main=main, xlab=xlab, ylab=ylab, xlim=xlim,

lwd=lwd, yaxt='n', bty='n', type='n')

polygon(dens, col=col1, border=NA)

qbot <- quantile(x, bot)

qtop <- quantile(x, top)

x1 = min(which(dens$x >= qbot))

x2 = max(which(dens$x < qtop))

with(dens, polygon(x=x[c(x1,x1:x2,x2)], y=c(0, y[x1:x2], 0), col=col2, border=NA))

}

And now we can view the posteriors as compared to the true parameter values which were used to generate the data.

# Plot true vs posterior

posterior = extract(fit_gp)

par(mfrow=c(1, 3))

interval_density(posterior$rho, xlab="rho")

abline(v=rho, col=c_dark, lwd=3)

interval_density(posterior$alpha, xlab="alpha")

abline(v=alpha, col=c_dark, lwd=3)

interval_density(posterior$sigma, xlab="sigma")

abline(v=sigma, col=c_dark, lwd=3)

The true parameter values are all within the bulk of the posterior, meaning we were able to successfully recover the true parameters!

Hidden Markov Models

The other model we’ll be fitting is a hidden Markov model. In contrast to the Gaussian process, which assumed the \( y \) value was changing slowly over time, hidden Markov models assume that the process generating the data is switching - instantaneously - between states. The states are “hidden”, in that we don’t know for sure what state the system is in at any given time, but each state has a unique observation pattern associated with it. In our case, we’re concerned with the decoded probability flickering back and forth between near-0 and near-1, as would be expected if the brain area we’re decoding from is sampling options. So, our hidden Markov model will have 2 states, and the chance of observing a \( y \) value near \( 0 \) will be high in one state, and low in the other.

The Math

Our hidden Markov model will have two hidden states (\( N=2 \)). This means that our model has two free parameters which control the transition probabilities \( \phi \). The probability of transitioning from state \( i \) to state \( j \) is \( \phi_{i,j} \). In our Stan model, we’ll fit the “recurrent” transition probabilities - that is, the probability that the hidden state at the current timestep will be the same as the last timestep. We don’t have to fit the non-recurrent transition probabilities because they form a simplex with the recurrent transition probabilities (that is, with only two states, the non-recurrent transition probabilities have to be \( 1 \) minus the recurrent transition probabilities, and vice-versa, since the sum of the two must sum to \( 1 \)). So, the two free parameters which control the transition probabilities are:

\[\phi_{i,i} ~ \text{for} ~ i \in \{1,2\}\]We model the probability of observing a certain value for \( y \), given the current hidden state, as a beta distribution. I’ll refer to this as the “observation model”, because it’s the part of the model which controls how the hidden states relate to the outcomes (\( y \) values) we observe. However, for each hidden state, one parameter of the distribution is fixed at \( 1 \), and the other parameter is free. This gives us two additional parameters (\( \theta_1 \) and \( \theta_2 \)) which control the observation probabilities corresponding to the hidden states. So for the first state \( x_1 \),

\[y|x_1 \sim \text{Beta}(1, \theta_1)\]and for the second state \( x_2 \),

\[y|x_2 \sim \text{Beta}(\theta_2, 1)\](Side note: we don’t have to use a beta distribution for our observation model, we just need a distribution bounded between 0 and 1, which is greater towards one extreme than the other. We could also have used a 1-dimensional logit-normal distribution, and constrained \( \mu \) to be \( >0 \) for one state and \( <0 \) for the other state. I decided to use a beta distribution just because it’s simpler - there’s only 1 free parameter here per hidden state, whereas a logit-normal distribution would have two.)

The reason the beta distribution has flipped parameters for one state (that is, \( \alpha=1,\beta=\theta_1 \) for one state and \( \alpha=\theta_2,\beta=1 \) for the other) is so the first state will always correspond to \( y \) values which are closer to 0, and the second state will always correspond to \( y \) values which are closer to 1. If we allow both parameters of the beta distribution to be free for both states, our resulting posterior distributions will be bimodal. This is because the first state could correspond to low \( y \) values, and the second to high \( y \) values, or vice versa - both would be equally likely!

Setting one parameter of the beta distribution to \( 1 \) also ensures that the

resulting beta distributions are monotonic - that is, the probability value is

always greater towards the corresponding state’s extreme (either 0 or 1).

Plotted below are these beta distributions with different values of \( \theta \).

The blue lines correspond to the first state, and the red lines to the second

state. Darker shades indicate larger values of \( \theta \).

x = seq(0, 1, l=300)

df = data.frame(x = x,

s11 = dbeta(x, 1, 1.2),

s12 = dbeta(x, 1, 3),

s13 = dbeta(x, 1, 5),

s21 = dbeta(x, 1.2, 1),

s22 = dbeta(x, 3, 1),

s23 = dbeta(x, 5, 1))

ggplot(df) +

geom_line(aes(x=x, y=s11), color=c_blue_light, size=1) +

geom_line(aes(x=x, y=s12), color=c_blue_mid, size=1) +

geom_line(aes(x=x, y=s13), color=c_blue_dark, size=1) +

geom_line(aes(x=x, y=s21), color=c_light, size=1) +

geom_line(aes(x=x, y=s22), color=c_mid, size=1) +

geom_line(aes(x=x, y=s23), color=c_dark, size=1) +

xlab('y value') +

ylab('Observation Probability') +

ggtitle('Observation Probabilities for each state, given different parameters')

Finally, to compute the probability of the model over all possible sequences of hidden states (marginalize over the hidden states), we will use the forward algorithm. A hidden Markov model assumes that the state at a given timepoint only affects the probability of the hidden state at the next timepoint (and not states at further timepoints). Therefore, we can compute the probability of being in the current state, given any previous sequence of hidden states, in a recursive manner. Suppose we’ve computed the probability of being in state \( 1 \) at timepoint \( t \) given any previous sequence of hidden states (the “marginal probability”, let’s call it \( \gamma(t,1) \)), and the same for state \( 2 \) (\( \gamma(t,2) \)). Then, we can compute the probability of being in state \( i \) at time \( t+1 \) with just the previous marginal probabilities (\( \gamma(t,1) \) and \( \gamma(t,2) \)), the transition probabilities (\( \phi \)), and the observation probabilities (\( p(y|x_j) \), which we defined earlier in terms of beta distributions).

\[\gamma(t,i) = p(y_t | x_t) \sum_{j \in \{1, 2\}} \phi_{j,i} ~ \gamma(t-1,j)\]The sum above is over possible states \( j \) (so only \( 1 \) and \( 2 \) in our case). This equation is just computing the probability of arriving at state \( i \) from any other state, times the probability of our observation given that we’re in that hidden state.

Priors

For the observation model parameters (\( \theta \)) we use a gamma prior with \( \alpha,\beta=2 \), and limit the values to be \( \geq 1 \),

\[\theta_i - 1 \sim \text{Gamma}(\alpha=2, \beta=2)\]This results in a distribution which has zero density \( <1 \), and low density at higher values.

theta_prior <- function(x) dgamma(x-1, 2, 1/2)

plot(theta_prior, 0, 10, n=300, xlab='Theta', ylab='',

main="Observation model parameter prior",

yaxt='n', bty='n', lwd=3, col=c_blue_mid)

(Side note: the notation above and the Stan programs below use the

\( \text{Gamma}(\alpha,\beta) \) parameterization of the gamma distribution, while

the R function dgamma uses the \( \text{Gamma}(\kappa,\theta) \) parameterization,

where \( \kappa=\alpha \) and \( \theta=1/\beta \), in case you were wondering why the

parameters in the call to dgamma were \( 2,1/2 \) instead of \( 2,2 \))

For the recurrent transition probability prior, we use a beta distribution with \( \alpha,\beta=1.2 \),

\[\phi_{i,i} \sim \text{Beta}(1.2, 1.2)\]This results in a “toast-shaped” distribution which is bounded between \( 0 \) and \( 1 \), and has lower density towards more extreme values.

trans_prior <- function(x) dbeta(x, 1.2, 1.2)

plot(trans_prior, 0, 1, n=300, xlab='Phi_{i,i}', ylab='',

main="Recurrent transition probability prior",

type='l', yaxt='n', bty='n', lwd=3, col=c_blue_mid)

Generating Data from a Hidden Markov Model

Now we’ll build a Stan routine to generate data from a hidden Markov model with certain parameters. In the generated quantities block, we’ll simulate the hidden Markov model and generate our data. We’ll start in a random hidden state, and transition to a different state (or the same state) at each time step randomly according to the transition probabilities. At each timestep, we’ll generate a \( y \) value from the observation parameters of that state.

writeLines(readLines("simulate_hmm.stan"))

data {

int<lower=1> N; //number of observations to generate

vector[2] phi[2]; //transition probabilities

real<lower=0> theta[2]; //observation distribution params

}

transformed data {

real thetas[2,2]; //observation beta distribution parameters

thetas[1,1] = 1;

thetas[1,2] = theta[1];

thetas[2,1] = theta[2];

thetas[2,2] = 1;

}

parameters {}

model {}

generated quantities {

// Declare variables

real y[N]; //observations

int<lower=1> s[N]; //hidden states

// Generate hidden state sequence and observations

s[1] = categorical_rng(rep_vector(0.5, 2)); //start at random state

y[1] = beta_rng(thetas[s[1],1], thetas[s[1],2]); //generate observation

for (t in 2:N) {

s[t] = categorical_rng(phi[s[t-1]]); //new state

y[t] = beta_rng(thetas[s[t],1], thetas[s[t],2]); //generate observation

}

}

We can use this Stan model to generate data from our hidden Markov model. Let’s generate 100 samples.

# Data

N = 100

We will also set the parameters of the HMM.

# Parameters

phi = array(c(0.9, 0.1, 0.1, 0.9), dim=c(2,2)) #transition probabilities

theta = c(5, 5) #observation distribution parameters

Now we can run the Stan model, which will generate data from a hidden Markov model with these parameters.

# Simulate

sim_params = list(N=N, phi=phi, theta=theta)

sim_hmm = stan(file='simulate_hmm.stan', data=sim_params, iter=1,

chains=1, seed=seed, algorithm="Fixed_param")

SAMPLING FOR MODEL 'simulate_hmm' NOW (CHAIN 1).

Iteration: 1 / 1 [100%] (Sampling)

Elapsed Time: 0 seconds (Warm-up)

0.001 seconds (Sampling)

0.001 seconds (Total)

# Extract simulated output

s = t(extract(sim_hmm)$s)-1

y = t(extract(sim_hmm)$y)

sim_data = data.frame(x=seq(N), s=s, y=y)

Let’s plot the data generated by our hidden Markov model:

# Plot generated data

ggplot(sim_data) +

geom_line(aes(x=x, y=s), size=1) +

geom_point(aes(x=x, y=y), color=c_mid) +

ggtitle('Simulated Hidden Markov Model')

In contrast to the Gaussian process, data generated by the hidden Markov model does not vary smoothly over time, but instead jumps back and forth between extremes. Because of the way we defined the observation part of the model, observations during the first hidden state are close to 0, and observations during the second hidden state are close to 1. However, all observations are between 0 and 1, and there is again some noise to the observations.

Fitting a Hidden Markov Model

Now that we’ve generated some data from a Gaussian process, we can attempt to fit a Gaussian process model to that data. We’ll use a separate Stan model, one which fits the parameters of the model to the data, instead of generating data from the parameters.

writeLines(readLines("hmm.stan"))

data {

int<lower=1> N; //number of observations

real<lower=0, upper=1> y[N]; //observations

}

parameters {

simplex[2] phi[2]; //transition probabilities

real<lower=1> theta[2]; //observation distribution params

}

model {

// Priors

target += gamma_lpdf(theta[1]-1 | 2, 2);

target += gamma_lpdf(theta[2]-1 | 2, 2);

target += beta_lpdf(phi[1,1] | 1.2, 1.2);

target += beta_lpdf(phi[2,2] | 1.2, 1.2);

// Compute the marginal probability over possible sequences

{

real acc[2];

real gamma[N, 2];

gamma[1,1] = beta_lpdf(y[1] | 1, theta[1]);

gamma[1,2] = beta_lpdf(y[2] | theta[2], 1);

for (t in 2:N) {

for (k in 1:2) {

acc[1] = gamma[t-1, 1] + log(phi[1,k]);

acc[2] = gamma[t-1, 2] + log(phi[2,k]);

gamma[t,k] = log_sum_exp(acc);

}

gamma[t,1] += beta_lpdf(y[t] | 1, theta[1]);

gamma[t,2] += beta_lpdf(y[t] | theta[2], 1);

}

target += log_sum_exp(gamma[N]);

}

}

Let’s fit the model to our generated data.

# Fit

y = y[,1]

stan_rdump(c("N", "y"), file="simulated_hmm.data.R")

hmm_data = read_rdump("simulated_hmm.data.R")

fit_hmm = stan(file='hmm.stan', data=hmm_data, seed=seed)

After MCMC sampling has finished, we can view the posterior intervals for each parameter.

# Show results of fit

print(fit_hmm)

Inference for Stan model: hmm.

4 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=4000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

phi[1,1] 0.83 0.00 0.06 0.70 0.79 0.84 0.88 0.93 4000 1

phi[1,2] 0.17 0.00 0.06 0.07 0.12 0.16 0.21 0.30 4000 1

phi[2,1] 0.09 0.00 0.04 0.03 0.07 0.09 0.12 0.18 4000 1

phi[2,2] 0.91 0.00 0.04 0.82 0.88 0.91 0.93 0.97 4000 1

theta[1] 4.56 0.01 0.83 3.15 3.96 4.50 5.11 6.31 4000 1

theta[2] 4.54 0.01 0.60 3.43 4.13 4.52 4.95 5.76 4000 1

lp__ 41.86 0.03 1.43 38.25 41.15 42.15 42.89 43.65 1949 1

Again we’ll check several diagnoistics to ensure our sampling effectively explored the parameter space. The Rhat values look good, and if we look at the posterior distributions per chain, it appears the chains converged.

# Plot density per chain

posterior = as.array(fit_hmm)

mcmc_dens_overlay(posterior,

pars=c("phi[1,1]", "phi[2,2]", "theta[1]", "theta[2]"))

The trace plots also indicate that the chains (likely) explored similar areas of parameter space, and that the warmup period was (probably) long enough.

# Plot trace per chain

mcmc_trace(posterior,

pars=c("phi[1,1]", "phi[2,2]", "theta[1]", "theta[2]"))

Our other three diagnostics also look good:

# Check other diagnostics

check_treedepth(fit_hmm)

0 of 4000 iterations saturated the maximum tree depth of 10.

check_energy(fit_hmm)

E-BFMI indicated no pathological behavior.

check_divergences(fit_hmm)

0 of 4000 iterations ended with a divergence.

Now we can see how the posterior distributions for the parameters match up to the true parameters we used to generate the data. We’ll also plot the prior distributions for each parameter so we can see how that effects the posterior. The prior distribution is plotted in blue, the posterior distribution in red, and the parameter value used to generate the data as a dark red vertical line.

# Plot true vs posterior

posterior = extract(fit_hmm)

par(mfrow=c(2, 2))

beta_prior <- function(x) dbeta(x, 1.2, 1.2)

gamma_prior <- function(x) dgamma(x, 2, 1/2)

interval_density(posterior$phi[,1,1], xlab="phi[,1,1]", xlim=c(0,1))

plot(beta_prior, 0, 1, add=TRUE, col=c_blue_light, lwd=3)

abline(v=phi[1,1], col=c_dark, lwd=3)

interval_density(posterior$phi[,2,2], xlab="phi[,2,2]", xlim=c(0,1))

plot(beta_prior, 0, 1, add=TRUE, col=c_blue_light, lwd=3)

abline(v=phi[2,2], col=c_dark, lwd=3)

interval_density(posterior$theta[,1], xlab="theta[1]", xlim=c(0,12))

plot(gamma_prior, 0, 12, add=TRUE, col=c_blue_light, lwd=3)

abline(v=theta[1], col=c_dark, lwd=3)

interval_density(posterior$theta[,2], xlab="theta[2]", xlim=c(0,12))

plot(gamma_prior, 0, 12, add=TRUE, col=c_blue_light, lwd=3)

abline(v=theta[2], col=c_dark, lwd=3)

Again we were able to recover the parameters of the model which generated the data!

Model Comparison using Bridge Sampling

What we’re really interested in is not just fitting the models to data, but comparing the models to see which one is more likely to explain our data. We’ll use a Bayes factor to compare the plausibility of our models, given the data. A Bayes factor is the ratio of the marginal probabilities for our two models (\( \mathcal{M}_1 \) and \( \mathcal{M}_2 \)).

\[\text{Bayes factor}~(\mathcal{M}_1, \mathcal{M}_2) = \frac{p(y ~ | ~ \mathcal{M}_1)}{p(y ~ | ~ \mathcal{M}_2)}\]Bayes factors greater than 1 indicate that model \( \mathcal{M}_1 \) is more likely to explain the data. The marginal likelihood of each model (\( p(y ~ | ~ \mathcal{M}) \)) is the sum of the posterior probability of the model across all possible combinations of parameter choices:

\[p(y~|~\mathcal{M}) = \int p(y~|~\theta, \mathcal{M}) ~ p(\theta ~ | ~ \mathcal{M}) d\theta\]where \( \theta \) is a set of parameter values, \( p(y~|~\theta, \mathcal{M}) \) is the likelihood of the model given that set of parameter values, and \( p(\theta ~ | ~ \mathcal{M}) \) is the prior probability of that choice of parameter values.

Unfortunately, that integral is difficult to evaluate, because it’s an integral in a space with dimensionality equal to the number of parameters in the model! Our models may have only three to four parameters, but keep in mind that if we want to apply these models to real experimental data, we’ll want to use multilevel models, where each subject gets their own set of parameters, and then there will be additional hyperparameters which constrain individuals’ parameter values. So, if we only have four parameters, with just 25 subjects, we’ll suddenly end up with upwards of a hundred parameters! And evaluating an integral in one-hundred-dimensional space is… hard. Or at least extremely computationally expensive.

Instead of directly evaluating the above integral to compute the marginal probability, we’ll estimate the value using a technique called “bridge sampling.”

Bridge Sampling

Bridge sampling is a method for estimating the marginal likelihood when you have MCMC samples from the posterior distribution (which we get when we fit the models with Stan!). I won’t get into the math of bridge sampling, but there’s a great tutorial on bridge sampling if you’re interested. There is also a bridgesampling package for R which we’ll be using to compute the Bayes factors between our models.

Comparing GP and HMM models with Bridge Sampling

Let’s use bridge sampling to compare the marginal likelihoods of each model given the data that we generated using a Gaussian process. If everything is working correctly, the Bayes factor should favor the Gaussian process model over the hidden Markov model (because we used the Gaussian process to generate the data in the first place!).

First we have to fit the hidden Markov model to the data generated by a Gaussian process (we’ve already fit the GP model to the GP-generated data, so we won’t re-do that here).

# Fit HMM to GP-generated data

gp_data = read_rdump("simulated_lgp.data.R")

fit_hmm_to_gp = stan(file='hmm.stan', data=gp_data, seed=seed)

We are able to fit the hidden Markov model to the data generated by the Gaussian process without problems.

# Check MCMC diagnostics

check_treedepth(fit_hmm_to_gp)

check_energy(fit_hmm_to_gp)

check_divergences(fit_hmm_to_gp)

0 of 4000 iterations saturated the maximum tree depth of 10.

E-BFMI indicated no pathological behavior.

0 of 4000 iterations ended with a divergence.

The resulting fit looks reasonable.

print(fit_hmm_to_gp)

Inference for Stan model: hmm.

4 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=4000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

phi[1,1] 0.94 0.00 0.04 0.85 0.92 0.95 0.97 0.99 4000 1

phi[1,2] 0.06 0.00 0.04 0.01 0.03 0.05 0.08 0.15 4000 1

phi[2,1] 0.04 0.00 0.02 0.01 0.02 0.03 0.05 0.09 4000 1

phi[2,2] 0.96 0.00 0.02 0.91 0.95 0.97 0.98 0.99 4000 1

theta[1] 4.68 0.01 0.90 3.14 4.05 4.61 5.23 6.63 4000 1

theta[2] 2.49 0.01 0.33 1.89 2.25 2.47 2.69 3.20 4000 1

lp__ 33.32 0.03 1.44 29.76 32.60 33.62 34.40 35.14 1852 1

# Plot true vs posterior

posterior = extract(fit_hmm_to_gp)

par(mfrow=c(2, 2))

interval_density(posterior$phi[,1,1], xlab="phi[,1,1]")

interval_density(posterior$phi[,2,2], xlab="phi[,2,2]")

interval_density(posterior$theta[,1], xlab="theta[1]")

interval_density(posterior$theta[,2], xlab="theta[2]")

However, with data generated by the Gaussian process, the Bayes factor favors the Gaussian process over the hidden Markov model!

# Perform bridge sampling and view Bayes factor for GP-generated data

bridge_gp_gp = bridge_sampler(fit_gp)

bridge_hmm_gp = bridge_sampler(fit_hmm_to_gp)

lbf1 = bf(bridge_gp_gp, bridge_hmm_gp, log=TRUE)

cat(sprintf("Log Bayes factor in favor of GP over HMM on GP-generated data: %0.3g\n", lbf1$bf))

Log Bayes factor in favor of GP over HMM on GP-generated data: 107

We can also see the error of the two marginal probability estimates.

cat(sprintf("Error percentage for GP fit to GP-generated data: %s\n",

error_measures(bridge_gp_gp)$percentage))

cat(sprintf("Error percentage for HMM fit to GP-generated data: %s\n",

error_measures(bridge_hmm_gp)$percentage))

Error percentage for GP fit to GP-generated data: 0.313%

Error percentage for HMM fit to GP-generated data: 0.449%

These error measures will help us determine how confident we can be that the estimated Bayes factor reflects which model truly has a larger marginal probability. In this case, the difference between the two estimated log marginal probabilities (the Bayes factor) is large, and the error on those estimates is small, and so we can be confident that the Gaussian process is indeed fitting the data better than the hidden Markov model. Which, of course, it should - because the data was generated by a Gaussian process!

Similarly, we want to ensure that the Bayes factor favors the hidden Markov model over the Gaussian process when fitting to the data generated by the hidden Markov model.

# Fit GP to HMM-generated data

hmm_data = read_rdump("simulated_hmm.data.R")

hmm_data$x = gp_data$x

fit_gp_to_hmm = stan(file='lgp.stan', data=hmm_data, seed=seed)

We are able to fit the Gaussian process model to the data generated by the hidden Markov model without problems.

# Check MCMC diagnostics

check_treedepth(fit_gp_to_hmm)

check_energy(fit_gp_to_hmm)

check_divergences(fit_gp_to_hmm)

0 of 4000 iterations saturated the maximum tree depth of 10.

E-BFMI indicated no pathological behavior.

0 of 4000 iterations ended with a divergence.

And the resulting fit looks reasonable.

print(fit_gp_to_hmm)

Inference for Stan model: lgp.

4 chains, each with iter=2000; warmup=1000; thin=1;

post-warmup draws per chain=1000, total post-warmup draws=4000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

rho 0.14 0.00 0.04 0.09 0.11 0.13 0.15 0.26 1591 1

alpha 2.07 0.01 0.37 1.45 1.81 2.04 2.30 2.85 2238 1

sigma 1.63 0.00 0.16 1.35 1.51 1.61 1.73 1.98 1981 1

lp__ 36.17 0.04 1.34 32.86 35.49 36.49 37.18 37.76 1336 1

# Plot true vs posterior

posterior = extract(fit_gp_to_hmm)

par(mfrow=c(1, 3))

interval_density(posterior$rho, xlab="rho", xlim=c(0, 0.4))

interval_density(posterior$alpha, xlab="alpha")

interval_density(posterior$sigma, xlab="sigma")

However, with data generated by the hidden Markov model, the Bayes factor favors the hidden Markov model over the Gaussian process!

# Perform bridge sampling and view Bayes factor for HMM-generated data

bridge_hmm_hmm = bridge_sampler(fit_hmm)

bridge_gp_hmm = bridge_sampler(fit_gp_to_hmm)

lbf2 = bf(bridge_hmm_hmm, bridge_gp_hmm, log=TRUE)

cat(sprintf("Log Bayes factor in favor of HMM over GP on HMM-generated data: %0.3g\n", lbf2$bf))

Log Bayes factor in favor of HMM over GP on HMM-generated data: 8.01

Again we’ll check the error of the marginal probability estimates.

cat(sprintf("Error percentage for GP fit to HMM-generated data: %s\n", error_measures(bridge_gp_hmm)$percentage))

cat(sprintf("Error percentage for HMM fit to HMM-generated data: %s\n", error_measures(bridge_hmm_hmm)$percentage))

Error percentage for GP fit to HMM-generated data: 0.609%

Error percentage for HMM fit to HMM-generated data: 0.271%

The difference between the two marginal probability estimates isn’t as huge this time, though the error of the estimates is still pretty small. Just in case, let’s check the lower bound of the Bayes factor estimate.

bf_lb = (bridge_hmm_hmm$logml -

0.01*as.numeric(sub("%", "", error_measures(bridge_hmm_hmm)$percentage))) -

(bridge_gp_hmm$logml +

0.01*as.numeric(sub("%", "", error_measures(bridge_gp_hmm)$percentage)))

cat(sprintf("Lower bound of Bayes factor estimate in favor of HMM over GP on HMM-generated data: %0.3g\n", bf_lb))

Lower bound of Bayes factor estimate in favor of HMM over GP on HMM-generated data: 8.01

Finally, the log marginal likelihood of the Gaussian process was greater when fit to data generated by a Gaussian process than when fit to data generated by a hidden Markov model.

cat(sprintf("Log marginal likelihood of GP fit to GP-generated data: %0.3g\n", bridge_gp_gp$logml))

cat(sprintf("Log marginal likelihood of GP fit to HMM-generated data: %0.3g\n", bridge_gp_hmm$logml))

Log marginal likelihood of GP fit to GP-generated data: 143

Log marginal likelihood of GP fit to HMM-generated data: 34.7

And the log marginal likelihood of the hidden Markov model was greater when fit to data generated by a HMM than when fit to data generated by a Gaussian process.

cat(sprintf("Log marginal likelihood of HMM fit to HMM-generated data: %0.3g\n", bridge_hmm_hmm$logml))

cat(sprintf("Log marginal likelihood of HMM fit to GP-generated data: %0.3g\n", bridge_hmm_gp$logml))

Log marginal likelihood of HMM fit to HMM-generated data: 42.7

Log marginal likelihood of HMM fit to GP-generated data: 35.5

Conclusion

By fitting both the Gaussian process model and the hidden Markov model to neural data, we can use bride sampling to find which model is more likely to explain the neural activity. Of course that will have to happen in a paper and not in a blog post!

There are a few other details we need to worry about when applying these models to real data. First, the Stan models above assumed a single process evolving over a short period of time. However, we’re studing neural activity during tasks which have repeated trials. So, in order to evaluate the model likelihoods over all trials, we have to alter the Stan code to sum the log probability over all trials. Also, with real data we have multiple subjects, which means we have to build a multilevel model which allows the parameters to vary slightly between subjects. I’ve written a follow-up post on how to implement those models.

It’s also important to realize that even if the Bayes factor favors one of these models over the other, that doesn’t imply that model explains how the neural activity is being generated. Rather, it just shows that the dynamics of the neural activity better match one model than the other. The Bayes factor between the Gaussian process and the hidden Markov model will only tell us whether the neural representation of reward appears to be flicking back and forth between options (as would be predicted by sampling/deliberative theories of decision-making), or more slowly varying over time (as would be predicted by drift diffusion or integrate-to-threshold theories). Ultimately, how the brain area of interest comes to represent reward in that way can only be understood by studying the inputs to that area, the connectivity within that area and its inputs, along with what information those inputs are representing and when.